Beyond Redundancy: Understanding, Implementing, and Optimizing High Availability in IT Systems

Downtime is a nightmare in today's digital economy, where businesses increasingly depend on their IT infrastructure to deliver services around the clock. One large tech firm recently lost $100 million in revenue and millions of subscribers to a rival because of a few hours of downtime. The stakes are even higher for small and midsize businesses (SMBs), whose budgets and resources are limited: a prolonged outage can be their death knell, driving them out of business.

Ensuring the high availability of your IT systems and infrastructure is one of the most effective ways to reduce the risk of downtime. Although it is almost impossible to eliminate downtime entirely, following the principles of high availability helps ensure that your network keeps functioning during an IT disruption or outage.

In this article, we will cover the following topics related to high availability:

- What is High Availability?

- Why is high availability essential in IT?

- How does high availability ensure system resilience?

- What metrics measure system availability?

- What are the best practices for high availability implementation?

- How do high availability and disaster recovery align?

- Differences: High availability vs. fault tolerance?

- What are high-availability clusters, and how are they used?

- Role and use cases of high availability software?

- Adapting high availability strategies for cloud computing?

- Critical components of high-availability infrastructure?

- How Does High Availability Work in Firewall Systems?

- How do I configure high availability on OPNsense with Zenarmor?

- What are the emerging trends in high availability?

What is High Availability?

High availability (HA) is the ability of a system to operate continuously, without failure, for a specified period of time. A highly available system consistently meets a predetermined level of operational performance. A popular yet demanding availability benchmark in information technology (IT) is "five nines" availability, which means the system or product is accessible 99.999% of the time.

Systems with high availability are used in sectors and situations where uptime is critical. Autonomous vehicles and industrial, healthcare, and military control systems are examples of high-availability systems in practice. These systems must always be operational, because people's lives can depend on them. For instance, if an autonomous vehicle's operating system malfunctions while the car is moving, the resulting accident could endanger its occupants, other motorists, pedestrians, and property.

Highly available systems must be carefully designed and tested before they go into service. Planning such a system requires every component to meet the specified availability criteria. The ability to back up data and fail over to redundant components is crucial for an HA system to reach its availability objectives, so designers must choose their data access and storage technologies carefully.

Why is High Availability Essential in IT?

The systems that most need to be operational at all times are frequently those that affect people's health, financial security, and access to food, housing, and other necessities of life. Put another way, they are components or systems that, if they perform below a predetermined level, will significantly affect a company or the lives of the people who depend on it.

As noted earlier, autonomous cars are obvious candidates for HA systems. For instance, a self-driving car could crash if its front-facing sensor malfunctioned and interpreted the side of an 18-wheeler as open road. The car as a whole would still be functioning, yet a serious accident would result because one of its components failed to meet the required level of operational performance.

Electronic health records (EHRs) are another area where HA systems are essential to people's lives. When a patient arrives at the emergency department in severe pain, the doctor needs immediate access to the patient's medical records to get a complete picture of their history and choose the best course of action. Does the patient smoke? Is there a family history of cardiac problems? What other medications are they taking? The answers to these questions are needed right away; a system outage must not delay them.

How does high availability ensure system resilience?

When a system, application, or network component fails, high-availability technologies keep business operations running, transparently to customers and users. HA design eliminates single points of failure to deliver uninterrupted operation, or uptime, over a long period. Highly available systems integrate five design concepts:

- push-button failover and failback for scheduled maintenance,

- automated failover,

- automatic detection of application-level failures,

- no data loss, and

- fast failover to redundant components.
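Several of these design concepts can be illustrated with a small sketch. It assumes a hypothetical `FailoverPair` class modelling an active-passive pair: a failed application-level health check triggers automated failover, and `switch_to` stands in for push-button failover and failback during scheduled maintenance. It deliberately leaves out data replication, which a real system needs to meet the no-data-loss requirement, and it does not check the standby's health before switching.

```python
class FailoverPair:
    """Hypothetical active-passive pair; an illustration, not a real HA product API."""

    def __init__(self, primary: str, standby: str) -> None:
        self.primary = primary
        self.standby = standby
        self.active = primary          # the node currently serving traffic
        self.events: list[str] = []    # audit trail of role changes

    def _peer(self, node: str) -> str:
        return self.standby if node == self.primary else self.primary

    def report_health(self, node: str, healthy: bool) -> None:
        """Automated failover, driven by an application-level health check."""
        if node == self.active and not healthy:
            self.active = self._peer(node)
            self.events.append(f"auto failover to {self.active}")

    def switch_to(self, node: str) -> None:
        """Push-button failover or failback, e.g. around scheduled maintenance."""
        if node not in (self.primary, self.standby):
            raise ValueError(f"unknown node: {node}")
        if node != self.active:
            self.active = node
            self.events.append(f"manual switch to {node}")


pair = FailoverPair("db-a", "db-b")
pair.report_health("db-a", healthy=False)  # primary fails its health check
print(pair.active)                         # db-b
pair.switch_to("db-a")                     # failback once db-a is repaired
print(pair.active)                         # db-a
```

A failure reported for the standby leaves the active node unchanged, since only the node serving traffic triggers failover.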

What metrics measure system availability?

A system's availability is measured against an ideal of 100% uptime, that is, continuous operation with no outages. An availability percentage is often computed with the following formula:

Availability is calculated as (monthly minutes - monthly downtime) * 100/monthly minutes.
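As a worked example of this formula, the sketch below assumes a 30-day month (43,200 minutes); the function name `monthly_availability` is illustrative.

```python
def monthly_availability(downtime_minutes: float, days_in_month: int = 30) -> float:
    """Availability (%) = (monthly minutes - monthly downtime) * 100 / monthly minutes."""
    monthly_minutes = days_in_month * 24 * 60
    if not 0 <= downtime_minutes <= monthly_minutes:
        raise ValueError("downtime must be between zero and the minutes in the month")
    return (monthly_minutes - downtime_minutes) * 100 / monthly_minutes


# 43.2 minutes of downtime in a 30-day month is 0.1% of 43,200 minutes.
print(round(monthly_availability(43.2), 3))  # 99.9
print(monthly_availability(0))               # 100.0
```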

The following three measures are used to gauge availability:

- The anticipated interval between two system failures is known as the mean time between failures, or MTBF.

- The average amount of time a system is unavailable is called mean downtime, or MDT.

- Recovery time objective (RTO), sometimes loosely described as the anticipated time to repair, is the maximum acceptable time to recover from an unexpected outage or to complete a scheduled outage.
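MTBF and MDT are often combined into a steady-state availability estimate, A = MTBF / (MTBF + MDT): the fraction of time a system is up over many failure-and-repair cycles. The sketch below applies it to hypothetical figures. It assumes failures and repairs average out over the long run, so it says nothing about the length of any single outage, which is what an RTO targets.

```python
def steady_state_availability(mtbf_hours: float, mdt_hours: float) -> float:
    """Long-run fraction of time a system is up: MTBF / (MTBF + MDT)."""
    if mtbf_hours <= 0 or mdt_hours < 0:
        raise ValueError("MTBF must be positive and MDT must be non-negative")
    return mtbf_hours / (mtbf_hours + mdt_hours)


# Hypothetical component: fails every 10,000 hours on average, down 1 hour each time.
availability = steady_state_availability(10_000, 1)
print(f"{availability:.5%}")  # 99.99000%
```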

These metrics can be employed by service providers or internal systems to guarantee clients a specific degree of service as outlined in a service-level agreement (SLA). SLAs are agreements that outline the percentage of availability that users of a system or service can anticipate.

Availability metrics are interpreted differently depending on what the end user considers "available" to mean for a given system or service. Performance problems may lead users to consider a system unusable even when it is still partly working. Despite this subjectivity, SLAs define availability indicators in concrete terms, and the system or service provider must meet them.

With 99.999% availability, a system or SLA allows at most the following cumulative outage time:

| Time period | Maximum downtime |
|---|---|
| Daily | 0.9 seconds |
| Weekly | 6.0 seconds |
| Monthly | 26.3 seconds |
| Yearly | 5 minutes 15.6 seconds |

Table 1. Service outage duration for 99.999% availability

To put this in perspective, an organization that meets the three-nines standard (99.9%) will have about 8 hours and 45 minutes of downtime per year. With two nines, downtime is far more noticeable: 99% availability corresponds to roughly 3.65 days of downtime per year.
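These downtime figures follow from multiplying a period's length by the unavailable fraction (100% minus the target). The sketch below reproduces Table 1 and the yearly figures for 99.9% and 99%; it assumes a 365.25-day year and an average month of one twelfth of that year, which is an assumption about how the table was computed.

```python
# Period lengths in seconds; month and year assume a 365.25-day year.
PERIOD_SECONDS = {
    "daily": 86_400,
    "weekly": 7 * 86_400,
    "monthly": 365.25 * 86_400 / 12,
    "yearly": 365.25 * 86_400,
}


def downtime_budget(availability_pct: float, period: str) -> float:
    """Maximum downtime, in seconds, allowed by an availability target over a period."""
    return PERIOD_SECONDS[period] * (100 - availability_pct) / 100


for period in PERIOD_SECONDS:
    print(f"{period:>8}: {downtime_budget(99.999, period):.1f} s")

for target in (99.9, 99.0):
    hours = downtime_budget(target, "yearly") / 3600
    print(f"{target}% -> {hours:.2f} hours ({hours / 24:.2f} days) per year")
```

The yearly five-nines budget of about 315.6 seconds is the 5 minutes 15.6 seconds shown in Table 1.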

What are the best practices for high-availability implementation?

To minimize disruption for end users, a highly available system should recover quickly from any kind of failure. High-availability best practices include the following:

- Eliminate single points of failure: any node or component whose malfunction would bring down the whole system.

- Make sure that all data and systems are backed up for quick and simple recovery.

- Use load balancing to distribute network and application traffic across servers or other devices. HAProxy is one example of a load balancer; deploy load balancers redundantly so they do not become single points of failure themselves.

- Closely monitor the health of back-end database servers.

- Distribute resources across several geographic regions so that a natural disaster or power outage in one area does not take down the whole system.

- Put reliable failover mechanisms in place. For storage, a storage area network (SAN) or a redundant array of independent disks (RAID) are common ways to add redundancy.

- Install a system that can identify malfunctions as soon as they happen.

- Design the components for high availability, and verify the system's functionality before putting it into service.

How do high availability and disaster recovery align?

Disaster recovery (DR) is the part of security planning that focuses on recovering from a catastrophic incident, such as a natural disaster, that damages a data center or other infrastructure. DR means having a strategy ready for when a system or network fails and for handling the aftermath. HA techniques, by contrast, address smaller, more localized errors and failures.

The infrastructure and tactics used for DR and HA have much in common. Backups and failover procedures are relevant in a disaster recovery scenario, and every essential component of a high-availability system should have access to them. Examples of such components include servers, storage units, network nodes, satellites, and entire data centers. The system's infrastructure must include backup components; for instance, an organization needs to be able to switch to a backup server if its database server fails.

An HA system also needs data backups to preserve availability in the event of corruption, loss, or storage problems. To ensure data resilience and fast recovery from data loss, a data center should have automated disaster recovery procedures in place and store backups on redundant servers.

Differences: High availability vs. fault tolerance?

Fault tolerance contributes to high availability, much like DR does. The capacity of a system to withstand, predict, and react automatically to malfunctions in its operations is known as fault tolerance. Redundancy is necessary for a fault-tolerant system to reduce disturbance in the event of a hardware breakdown.

To achieve redundancy, IT organizations should use an N+1, N+2, 2N, or 2N+1 approach. Let N be the number of components, for example servers, required to keep the system functioning. An N+1 model adds one extra server to the N required, and an N+2 model adds two. A 2N model uses twice as many servers as the system normally needs, and a 2N+1 model uses twice as many plus one more. These models guarantee that mission-critical components have at least one backup.
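The arithmetic behind these redundancy models can be sketched as follows, using N = 4 as an example. The `tolerated_failures` helper assumes that every spare can stand in for any failed server, which holds only when the servers are interchangeable; both function names are illustrative.

```python
def servers_required(n: int, model: str) -> int:
    """Total servers for a redundancy model, where n servers are needed to run the system."""
    if n < 1:
        raise ValueError("n must be at least 1")
    totals = {"N+1": n + 1, "N+2": n + 2, "2N": 2 * n, "2N+1": 2 * n + 1}
    return totals[model]


def tolerated_failures(n: int, model: str) -> int:
    """Server failures the model absorbs, assuming any spare can replace any server."""
    return servers_required(n, model) - n


for model in ("N+1", "N+2", "2N", "2N+1"):
    print(f"{model:>4}: {servers_required(4, model)} servers, "
          f"survives {tolerated_failures(4, model)} failure(s)")
```

The output makes the trade-off visible: 2N and 2N+1 provide far more headroom than N+1 or N+2, at the cost of roughly doubling the hardware.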

A system can be highly available without being fault tolerant. Suppose an HA system that is not fault tolerant runs into trouble hosting a virtual machine (VM) on a server in a cluster of nodes. The hypervisor may try to restart the VM in the same host cluster, which will probably work if the problem is software-related. If the cluster's hardware is the problem, however, restarting the VM in the same cluster will not help, because the VM still runs on the same faulty cluster.

In the same scenario, a fault-tolerant approach would likely apply an N+1 strategy and restart the VM on a new server in a separate cluster, which makes zero downtime far more achievable. A DR strategy goes one step further, ensuring that a copy of the complete system exists somewhere else for use in a disaster.

What are high-availability clusters, and how are they used?

A high-availability cluster is a type of computer system designed to give customers access to essential services and applications with as little downtime as possible. It consists of several servers, or nodes, configured to work together to deliver a single, cohesive service or application. If one node fails, the others take over so that users can still reach the service or application.

High-availability clusters come in several forms, such as active-passive, active-active, and hybrid clusters:

- Active-Passive Clusters: An active-passive cluster has one active node that handles all requests and one or more passive nodes on standby. If the active node fails, a passive node takes over and becomes active. This kind of cluster is simple and quick to set up, but it may cause downtime if the failover process takes too long.

- Active-Active Clusters: Many active nodes handling requests concurrently make up an active-active cluster. Although this kind of cluster can scale and perform better, it might be more difficult to set up and maintain.

- Hybrid Clusters: A hybrid cluster combines elements of active-passive and active-active clusters. It usually consists of one or more active nodes that handle requests and one or more standby nodes. If an active node fails, a standby node takes over and becomes active, providing failover protection.

Numerous settings, such as web servers, databases, and mission-critical applications, require high-availability clusters. They are a crucial instrument for guaranteeing the uninterrupted functioning of apps and services, as well as for preventing data loss and outages.

Clusters often require specialized hardware and software, such as redundant power supplies, storage area networks (SANs), and load balancers, to provide high availability. They can also monitor the health of the nodes and initiate failover when necessary, using failover protocols such as the Heartbeat protocol.
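Heartbeat-based failure detection, which clusters use to decide when to fail over, can be sketched as below. This is a generic illustration, not the API of the Heartbeat software: the `HeartbeatMonitor` class, the one-second interval, and the three-missed-beats threshold are assumptions chosen for the example, and timestamps are passed in explicitly so the behaviour is deterministic.

```python
import time
from typing import Iterable, Optional


class HeartbeatMonitor:
    """Hypothetical failure detector: a node is suspected after missing several heartbeats."""

    def __init__(self, nodes: Iterable[str], interval_s: float = 1.0,
                 missed_limit: int = 3, start: Optional[float] = None) -> None:
        self.timeout_s = interval_s * missed_limit
        now = time.monotonic() if start is None else start
        self.last_seen = {node: now for node in nodes}

    def beat(self, node: str, at: Optional[float] = None) -> None:
        """Record a heartbeat received from a node."""
        self.last_seen[node] = time.monotonic() if at is None else at

    def failed_nodes(self, now: Optional[float] = None) -> list[str]:
        """Nodes whose last heartbeat is older than the timeout: failover candidates."""
        now = time.monotonic() if now is None else now
        return sorted(n for n, seen in self.last_seen.items() if now - seen > self.timeout_s)


monitor = HeartbeatMonitor(["node-1", "node-2", "node-3"], start=0.0)
monitor.beat("node-1", at=2.5)
monitor.beat("node-2", at=2.5)
print(monitor.failed_nodes(now=4.0))  # ['node-3']
```

Choosing the threshold is a trade-off: a shorter timeout fails over sooner but risks false alarms when the network is briefly congested.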

In short, a high-availability cluster is a group of cooperating nodes that provides failover protection when any one of them fails, which makes it a valuable tool for keeping services and applications running in many environments.

Role and use cases of high availability software?

In high-availability systems, multiple servers or nodes work together to deliver a smooth, continuous service. These systems detect failures in real time and automatically redirect traffic to the surviving nodes, so users can keep using the service or application even when hardware fails or other technical problems occur.

Load balancing, which distributes traffic across several servers, is a widely used technique for achieving high availability. If one server fails, the load balancer can automatically reroute traffic to another. In addition, data can be replicated across several servers or data centers, which provides redundancy and reduces the risk of data loss when a failure occurs.
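This rerouting can be sketched as a round-robin load balancer that skips backends marked unhealthy. The `RoundRobinBalancer` class and the backend names are hypothetical; production load balancers such as HAProxy run their own health checks and support other balancing algorithms as well.

```python
import itertools


class RoundRobinBalancer:
    """Minimal sketch: rotate through backends, skipping any marked unhealthy."""

    def __init__(self, backends: list[str]) -> None:
        self.backends = list(backends)
        self.healthy = set(self.backends)
        self._rotation = itertools.cycle(self.backends)

    def mark(self, backend: str, healthy: bool) -> None:
        """Update a backend's health, e.g. from a periodic health check."""
        if healthy:
            self.healthy.add(backend)
        else:
            self.healthy.discard(backend)

    def pick(self) -> str:
        """Return the next healthy backend, trying each backend at most once."""
        for _ in range(len(self.backends)):
            candidate = next(self._rotation)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy backends available")


lb = RoundRobinBalancer(["web-1", "web-2", "web-3"])
lb.mark("web-2", healthy=False)       # web-2 fails its health check
print([lb.pick() for _ in range(4)])  # ['web-1', 'web-3', 'web-1', 'web-3']
```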

The following sectors and use cases benefit from high availability:

- E-commerce: To guarantee unbroken access to their websites, uphold real-time inventory systems, and seamlessly process client transactions, online merchants must prioritize high availability.

- Financial services: To guarantee transactional integrity, offer constant access to banking services, and protect client data, financial organizations must have high availability.

- Healthcare: To guarantee continuous access to patient information, medical imaging systems, and vital monitoring equipment, high availability is essential to healthcare systems.

- Government and public sector: In order to preserve connectivity, provide e-government services, and protect citizen data, government agencies depend on high availability.

Adapting high availability strategies for cloud computing?

To achieve high availability in the cloud, organizations need to automate their infrastructure, build redundancy into their systems, and eliminate single points of failure. Combining failure detection, clustering, and load balancing is one way to do this.

Cloud service providers also offer a range of services that help enterprises achieve high availability, including automated failover, disaster recovery planning tools, and backup and recovery services.

Organizations must adhere to the following best practices in order to achieve high availability in the cloud:

- Plan for Failure: Design your infrastructure on the assumption that components will fail.

- Employ Redundancy: Ensure that your systems have redundancy so that another can take over in the event that a component fails.

- Automate Everything: Automate your infrastructure to guarantee consistent configuration and lower the possibility of human error.

- Test Frequently: To make sure your redundancies and systems are operating properly, test them frequently.

- Monitor and Alert: Monitor your infrastructure and configure alerts so that you catch problems before they become serious.

Critical components of high-availability infrastructure?

An IT architecture with high availability is supported by the following elements:

- Redundancy: In a high-availability cluster, redundant hardware, software, applications, and data allow another component to take over and complete the work in the event that one IT component, such as a server or database, fails.

- Replication: Replication is as essential to high availability as redundancy. Nodes in a high-availability cluster must share data and state with one another so that any node can take over when a server or network device fails.

- Failover: An off-site failover site is another essential part of a high-availability infrastructure. If the primary system fails, network traffic can be switched to the failover system.

- Load Balancing: In high-availability clusters, load balancing prevents any single server from being overwhelmed with requests. Load balancers route traffic and monitor server health so that the system remains accessible even as request volumes rise.
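Replication and failover work together: a standby can take over without losing data only if it already holds a copy of every acknowledged write. The sketch below assumes a hypothetical in-memory `ReplicatedStore` with synchronous replication, where a write counts as complete only after every replica has applied it. Real databases typically offer this as one option alongside asynchronous replication, which is faster but can lose the most recent writes on failover.

```python
class ReplicatedStore:
    """Hypothetical key-value store: one primary copy plus synchronously updated replicas."""

    def __init__(self, replica_count: int = 1) -> None:
        self.primary: dict[str, str] = {}
        self.replicas: list[dict[str, str]] = [{} for _ in range(replica_count)]

    def write(self, key: str, value: str) -> None:
        # Synchronous replication: the write is applied to every copy before it
        # is considered complete, so no acknowledged write exists only on the primary.
        self.primary[key] = value
        for replica in self.replicas:
            replica[key] = value

    def fail_over(self) -> None:
        """Discard the failed primary and promote the first replica."""
        if not self.replicas:
            raise RuntimeError("no replica left to promote")
        self.primary = self.replicas.pop(0)


store = ReplicatedStore(replica_count=2)
store.write("order:42", "paid")
store.fail_over()                 # primary lost; a replica takes over
print(store.primary["order:42"])  # paid
```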

How Does High Availability Work in Firewall Systems?

A high availability (HA) firewall is a firewall system that maintains network protection even when a firewall device fails. Redundancy eliminates single points of failure and provides continuous security coverage, whereas a single-firewall setup can suffer prolonged downtime when its device malfunctions.

High-availability firewalls provide the following essential features:

- Redundant firewall devices: Multiple firewall peers cooperate within HA firewall systems to offer constant protection. Another name for this is HA clustering.

- Automatic failover: If the primary firewall fails, the system immediately switches to a backup device. This seamless failover keeps the network protected, and a properly designed HA setup has no single point of failure.

- Load balancing: In active-active deployments, HA systems spread network traffic across several firewalls. Besides preventing any single device from becoming a bottleneck, this improves performance.

- Stateful failover: HA firewalls keep network connections in their current state. The new firewall device can continue where the old one left off in the event that one goes down.
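Stateful failover can be illustrated by copying the active firewall's connection-state table to its peer. The sketch below is a simplified, hypothetical model (the `Flow`, `FirewallNode`, and `sync_states` names are illustrative): without the state copy, the backup would not recognize connections opened through the primary and would drop them after failover. In OPNsense, state synchronization between cluster members is handled by pfsync.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    """One tracked connection (simplified 4-tuple; protocol omitted)."""
    src: str
    sport: int
    dst: str
    dport: int


class FirewallNode:
    def __init__(self, name: str) -> None:
        self.name = name
        self.states: set[Flow] = set()  # connection-state table

    def open(self, flow: Flow) -> None:
        self.states.add(flow)  # new connection allowed by policy and tracked

    def passes_existing(self, flow: Flow) -> bool:
        return flow in self.states  # mid-stream packets need a matching state


def sync_states(active: FirewallNode, backup: FirewallNode) -> None:
    """Replicate the active node's state table to the backup."""
    backup.states = set(active.states)


primary, backup = FirewallNode("fw-1"), FirewallNode("fw-2")
ssh = Flow("10.0.0.5", 51515, "192.0.2.10", 22)
primary.open(ssh)
sync_states(primary, backup)
# fw-1 now fails; fw-2 keeps passing the established SSH session.
print(backup.passes_existing(ssh))  # True
```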

What are best practices for HA firewalls?

Firewalls with high availability provide significant advantages. However, in order to optimize their efficacy, HA systems need to be properly deployed and maintained. The following are some best practices for implementing high-availability network architectures:

- Apply redundancy strategically: Focus HA firewall coverage on mission-critical assets, the applications and data stores that deliver business services every day. Give redundancy top priority for the functions most important to the business, because failures in these areas are the most costly.

- Build failover into your network design: Every HA configuration must include failover procedures. They prevent network disruptions, keep firewalls and other security technologies running, and help manage network traffic: if one or more nodes fail, failover procedures redirect traffic to the remaining active nodes.

- Consider geographic redundancy: Many businesses operate multiple sites. Deploy HA systems that protect the infrastructure if a single local network fails, and make sure active nodes provide firewall protection for every network user.

- Use load balancing: Active/active or N+1 HA designs let the enterprise network balance load. If an active node fails, the traffic load is rebalanced for a smooth failover, and traffic from the failed firewall node should automatically move to the nodes still providing active coverage. This increases fault tolerance for service applications and acts as an "insurance policy."

- Align your HA firewall with your RPO goals: Many businesses use a recovery point objective (RPO) of little more than 60 seconds. Tune the heartbeat connection and active firewall settings to match the RPO you have chosen. A low RPO limits data loss during a network outage, which is a key benefit of high-availability systems.

How do I configure high availability on OPNsense with Zenarmor?

One notable feature of OPNsense is its ability to provide automated failover to a redundant firewall. To enable hardware failover, OPNsense implements the Common Address Redundancy Protocol (CARP). Configuring two or more firewalls together forms a failover group: if an interface on the primary firewall fails, or the primary goes down completely, the secondary takes over. High availability is straightforward to configure on OPNsense systems.
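How CARP decides which firewall acts as master can be sketched in simplified form. Each member advertises at an interval of advbase + advskew/256 seconds, and the live member that advertises most often becomes master; when it stops advertising, the next-best member takes over. The model below ignores preemption settings, timers, and the shared virtual IP itself, and the skew values (0 on the primary, 100 on the backup) are example values.

```python
from dataclasses import dataclass


@dataclass
class CarpMember:
    name: str
    advbase: int = 1   # base advertisement interval, in seconds
    advskew: int = 0   # added as advskew/256 s; a higher skew makes a member less preferred
    alive: bool = True

    @property
    def interval(self) -> float:
        """Advertisement interval in seconds: advbase + advskew / 256."""
        return self.advbase + self.advskew / 256


def elect_master(members: list[CarpMember]) -> CarpMember:
    """Simplified election: the live member with the shortest interval is master."""
    live = [m for m in members if m.alive]
    if not live:
        raise RuntimeError("no live CARP members")
    return min(live, key=lambda m: m.interval)


primary = CarpMember("fw-primary", advskew=0)
secondary = CarpMember("fw-secondary", advskew=100)
print(elect_master([primary, secondary]).name)  # fw-primary
primary.alive = False                           # primary goes down
print(elect_master([primary, secondary]).name)  # fw-secondary
```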

With this robust OPNsense feature, you can build a fully redundant firewall system that switches over seamlessly and automatically, and users should see minimal disruption to their connections while the secondary firewall takes over. In an OPNsense cluster, the Zenarmor plugin must be installed separately on each cluster node. The high availability feature requires Zenarmor Business Edition.

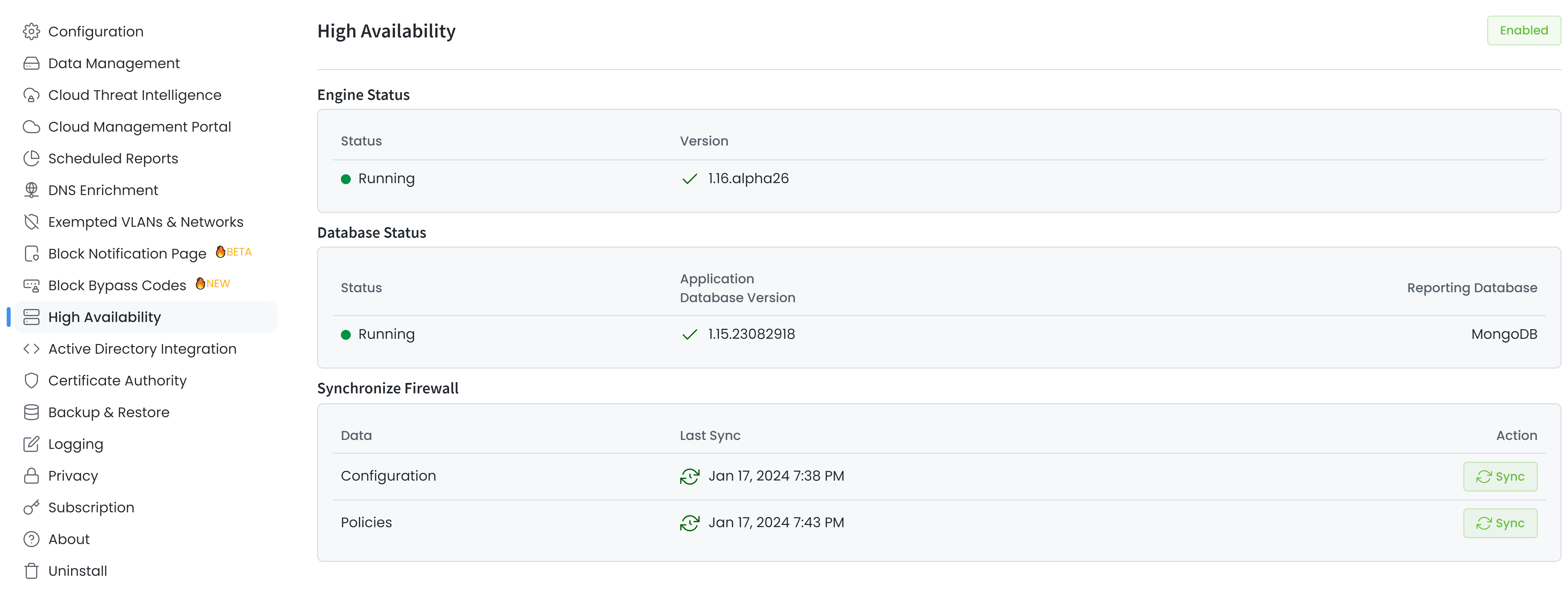

By navigating to Zenarmor → Settings → High Availability on the OPNsense GUI, you can view the Zenarmor versions on the firewalls.

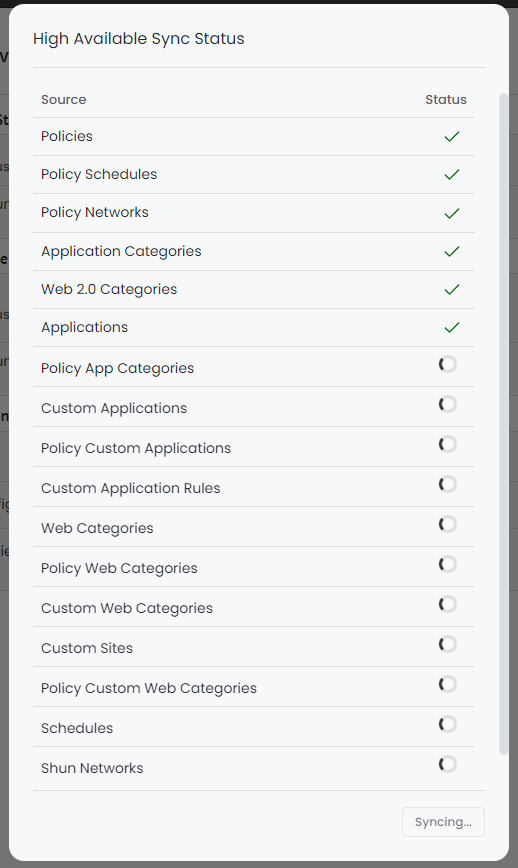

When you change the Zenarmor configuration or rules on the primary OPNsense, a warning appears stating that you are working on a cluster and that the settings should be synchronized. Click the Sync button in the notification to start synchronization.

If you have an OPNsense cluster, you can view the Zenarmor version and Zenarmor service status of the backup firewall, and verify whether its policies and configuration match those of the primary firewall.

Figure 1. Zenarmor High Availability page on a master firewall.

The High Availability page shows the synchronization status of the Zenarmor configuration and policies with the secondary firewall. To start synchronization, click the Sync button in the Synchronize Firewall pane.

Figure 2. Synchronization of Zenarmor Configuration and Policies with Backup Firewall

What are the emerging trends in high availability?

Emerging technologies play a central role in the constantly evolving field of high availability. Integrating AI and machine learning to automate redundancy and provide predictive analytics should make HA systems more dependable. Blockchain technology is also gaining attention as a way to ensure data availability and integrity across distributed networks.

Edge computing is poised to become a new frontier for high availability. By moving processing closer to the data source, edge computing can greatly reduce response times and improve availability. However, it also makes HA systems harder to maintain and control in more distributed and dynamic environments.

In conclusion, high-availability solutions are not just a technical necessity in the digital age but also an economic imperative. HA systems will need continuous improvement and adjustment to meet organizations' changing demands and keep them running without interruption, whatever obstacles they encounter.